Researchers develop VR software to control a robot proxy through natural movements

To date, virtual reality has been widely associated with gaming. But as this immersive technology improves, it will increasingly make its way to other spheres of life, including work, impacting how people miles apart or even across the world can collaborate.

One effort that could soon inform VR applications in professional settings comes from researchers at Brown University and Cornell University, who will present on the concept at this year's Association for Computing Machinery Symposium on User Interface Software and Technology on Oct. 29.

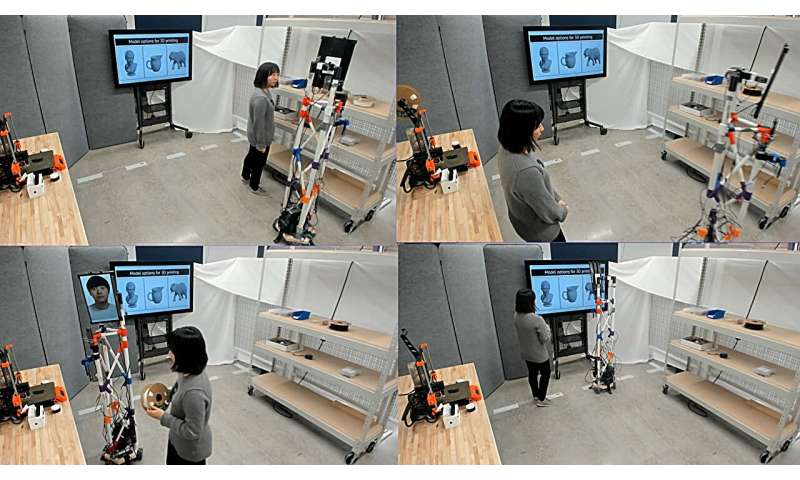

VRoxy, the software program developed by the team, allows a user in a remote location to put on a VR headset and be virtually transported to a space—be it an office, laboratory or other setting—where their colleagues are physically working. From there, the remote user is represented through a robot present at the physical location, allowing them to move through that environment using natural movements, like walking, and collaborate with colleagues through gestures such as pointing at objects, head animations (like nodding) and even making facial expressions through the robot proxy.

The software is in its early stages. But already the researchers say it has the potential to address some of the biggest challenges in using robot proxies and augmented reality software for remote collaboration.

"Right now, motion-controlled robots for collaboration require the physical location to have the same amount of space as the remote environment, but that's often not the case," said Brandon Woodard, a Ph.D. student at Brown and graduate researcher for the Department of Computer Science's Visual Computing Group. "Rooms have different dimensions. Even when meeting with people in Zoom, it's easy to see those differences. Some could be in home offices, others in kitchens or living rooms, while others are in the work space or classroom."

When remote users are in much smaller spaces than the physical locations they virtually visit, that presents a major obstacle for augmented reality technology in terms of full immersion and navigation of that virtual space. For instance, a college student can't physically get to the other side of a large lab in which they are virtually present if their remote space is physically smaller, like a residence hall room. Crossing that distance with a mobile robot proxy requires users to manually maneuver the robot with some type of remote control, which draws some of their attention away.

"These actions require significant amounts of cognitive load," said Mose Sakashita, a Ph.D. student at Cornell and the study's lead author. "You have to focus on controlling the robot instead of focusing on the collaboration task."

VRoxy helps to address this, the researchers say. The primary way is by introducing an innovative mapping technique that addresses differences in room size, allowing a user to walk around in a small space while a mobile robot automatically reproduces their movement in a physical location.

Here's how it works: The remote space where the VR user will be and the physical space where they want to be are mapped using visualization software. The physical location is then rendered into a 3D model. This 3D environment is what the VR user sees in their headset. While in there, the user sees—as blue circles on the ground—what the researchers call teleport links. These links allow the larger space to be compressed into the smaller one within the VR world.

The VR user can step into these links and when they do, the screen will fade to black and they'll be in another spot. This could be slightly further into a room, the other side of that room or even another building. The robot proxy moves through the physical space as the user goes through the teleport links without the VR user needing to manually control it. If the user goes through a link to another building, another robot would activate.
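The teleport-link idea described above can be illustrated with a minimal sketch. This is not the researchers' code: the class name, field names, coordinates and trigger radius are all hypothetical, chosen only to show how a circle in a small room might be paired with a destination in a larger space that the robot then drives to.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional


@dataclass(frozen=True)
class TeleportLink:
    """One blue circle on the floor. All fields are illustrative assumptions."""
    label: str
    circle_xy: tuple[float, float]      # circle centre in the user's small room, metres
    robot_goal_xy: tuple[float, float]  # matching spot in the larger physical space, metres
    radius: float = 0.4                 # how close the user must stand to trigger the link


def find_entered_link(user_xy, links) -> Optional[TeleportLink]:
    """Return the first link whose circle contains the user's position, if any."""
    ux, uy = user_xy
    for link in links:
        cx, cy = link.circle_xy
        if hypot(ux - cx, uy - cy) <= link.radius:
            return link
    return None


# Hypothetical layout: a dorm-sized room mapped onto a much larger lab.
LINKS = [
    TeleportLink("entrance to far bench", circle_xy=(0.5, 0.5), robot_goal_xy=(12.0, 3.0)),
    TeleportLink("far bench to shelf", circle_xy=(2.0, 1.5), robot_goal_xy=(12.0, 9.0)),
]


def robot_goal_for(user_xy, links=LINKS):
    """Where the robot should navigate, or None if the user is not on a link."""
    link = find_entered_link(user_xy, links)
    return None if link is None else link.robot_goal_xy
```

In this sketch, a user standing on the first circle yields a goal on the far side of the lab, and the robot would travel there on its own while the headset view fades and resumes at the new spot.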

"It's almost like if you're playing a video game, you go into a portal and you get warped somewhere but instead of the robot actually warping somewhere because it's in a real physical space, it just knows to navigate to the spots where the teleport links are," Woodard said.

The teleport links eventually bring the VR user to designated task areas. This is where the 3D digital world the VR user sees, which runs on the simulation engine Unity, fades away and a live feed of the environment turns on. In these spaces, the VR user can move around as if they were there, and the proxy robot recreates their movements in that space using movement tracking software on the VR headset.

Currently, the VR user can point at objects, lean in close for better looks and even walk sideways along a shelf to look for objects. The system also captures head rotations and head nods. The Quest Pro headset the researchers are using can also capture facial expressions, which are shown through an attached screen on the prototype robot.

When the VR user is not in the live view, the collaborators in the physical space appear to them as 3D avatars through the use of a motion-sensing system called Azure Kinect. This helps the VR user understand where people are while navigating the space.

The researchers envision their software being used in collaborative, hands-on environments when someone can't physically be in the space where the work is happening and the room they are in is very different from the in-person space.

Now that the initial proof of concept is complete, the research team hopes to build upon the software and the capabilities of the proxy robot, including giving it the ability to grab and manipulate objects.

More information:

Mose Sakashita et al., VRoxy: Wide-Area Collaboration From an Office Using a VR-Driven Robotic Proxy, Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (2023). DOI: 10.1145/3586183.3606743

Provided by Brown University