Exploring public opinion on liability in surgical robotics

Imagine a time in the future when all operations are performed by robots acting independently of people: You fall one day and break your ankle, and are told by your doctor that you need surgery. A robot carries out the operation. At first, it seems to have gone well. But when you visit the doctor two weeks after the operation for a follow-up appointment, X-rays show that the bone is in the wrong place and you will need another operation. Who should be held responsible for this?

Increasingly, robots have the potential to be more independent and learn from the work they do. Instead of being controlled by a person, these systems use sensors to guide them. The machine processes the data it gathers and can learn from it—changing how it functions in the future. This poses problems in trying to work out who is responsible when a robot makes a mistake and a person is harmed. This is particularly critical when robots are used for surgical procedures where people are vulnerable.

Working out who is responsible when a robot is involved in surgery is complicated because the use of robots in a procedure can vary greatly.

We propose a simple classification system that includes the full range of robotic systems:

- Human-controlled robotic systems: These systems include robots that are completely controlled by the surgeon, who can sometimes work remotely (telesurgical robots).

- Robot-assisted systems: These systems help the surgeon carry out specific tasks such as stitching wounds.

- Autonomous robotic systems: Such systems can conduct entire surgical procedures with minimal or no human supervision.

The courts have considered cases in which people were hurt by robots supervised by people, mainly in factories. In these cases, the courts looked at whether the robot was working properly (product liability), whether the employer was responsible (through inadequate training, for example) or whether the injured person had put themselves at risk by ignoring or not properly following safety guidelines.

iRobotSurgeon video. Credit: Ammer Jamjoom

Self-driving cars provide a useful model for working out liability issues in autonomous robotic systems. Surveys have revealed that members of the public are concerned about determining who would be held liable if there were an accident. The Moral Machine, an online platform, explored public attitudes to ethical dilemmas involving autonomous vehicles by posing scenarios in which out-of-control vehicles had to determine which members of the public to harm. The investigators found cross-cultural variation in moral preferences from around the world.

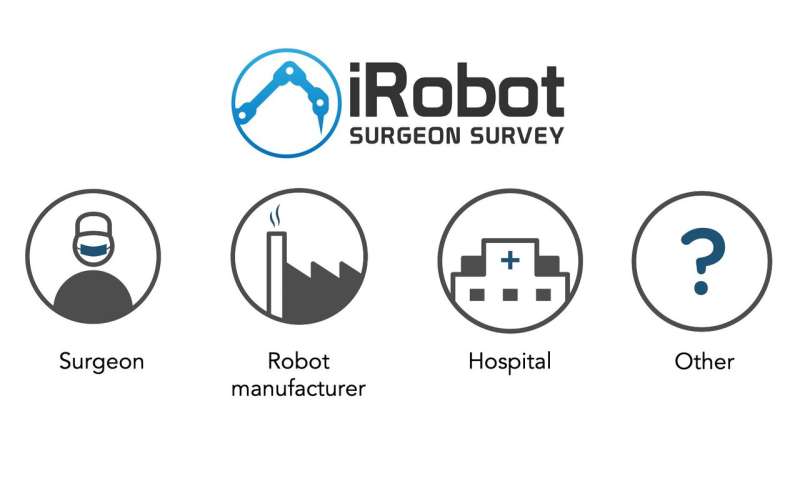

To address the responsibility dilemma in robotic surgery, we have created the iRobotSurgeon survey, which aims to explore public opinion toward liability as robotic surgical systems become increasingly autonomous. In the survey, respondents are presented with five scenarios in which the patient comes to harm and are asked to decide who they feel is most responsible: the surgeon, the robot manufacturer, the hospital, or another party (Figure 1).

We have developed the survey through an iterative approach with input from clinicians, patients, ethicists and public engagement professionals. The scenarios are designed not to have a clear culpable actor, and aim to get the respondent to provide an answer based upon their intuition and who they feel shoulders the most responsibility.

The survey aims to understand how increasing autonomy impacts who the public views as liable in robotic surgery. Collectively, we hope the survey will shed light on this thorny issue, provide useful insights for regulators and policymakers, and direct future research.

This Dialog was written by Dr. Ammer Jamjoom and edited by John Davidson. It is a summary of the original comment cited below.

This story is part of Science X Dialog, where researchers can report findings from their published research articles.

More information: Aimun A. B. Jamjoom et al. Exploring public opinion about liability and responsibility in surgical robotics, Nature Machine Intelligence (2020). DOI: 10.1038/s42256-020-0169-2

iRobotSurgeon survey website: www.iRobotSurgeon.com

Barfield, W. Liability for autonomous and artificially intelligent robots. Paladyn 9, 193–203 (2018).

Piao, J. et al. Public Views towards Implementation of Automated Vehicles in Urban Areas. Transp. Res. Procedia 14, 2168–2177 (2016).

Awad, E. et al. The Moral Machine experiment. Nature 563, 59–64 (2018).

Gurney, J. K. Sue My Car Not Me: Products Liability and Accidents Involving Autonomous Vehicles. (2013).

Ammer Jamjoom is a clinician based in the Leeds Major Trauma Centre with an interest in surgical robotics.